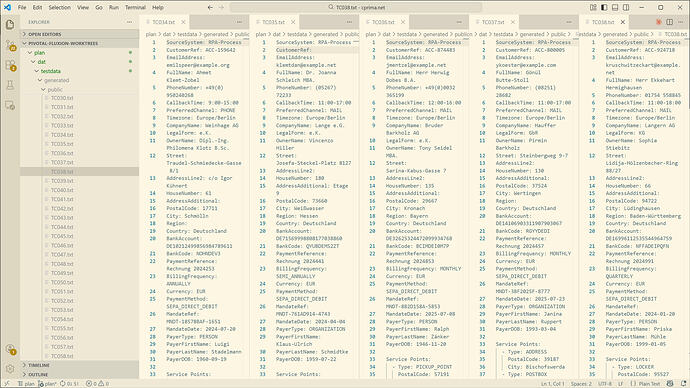

Next, I transform the data model (extracted by an LLM from an unstructured clickpath, then amended manually) into a JSON Schema that LLM coding agents can work with.

The target system I am automating handles its data through an object-relational mapper (ORM), so I move from the flat dot notation to a properly nested object representation.
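Here is a minimal Python sketch of that flat-to-nested step. It assumes the flat keys are dot-separated paths and that an all-numeric segment (as in `servicepoint.0.city`) denotes an array index. The keys below, such as `account.number`, are hypothetical and only mirror the property names of the schema that follows. The helper `unflatten` is illustrative, not the transformation actually used here.

```python
from typing import Any


def _lists_from_indices(node: Any) -> Any:
    """Recursively turn dicts whose keys are all digits ("0", "1", ...) into lists."""
    if isinstance(node, dict):
        converted = {key: _lists_from_indices(value) for key, value in node.items()}
        if converted and all(key.isdigit() for key in converted):
            # Order list items by numeric index; gaps in the indices are not checked.
            return [converted[key] for key in sorted(converted, key=int)]
        return converted
    return node


def unflatten(flat: dict[str, Any], sep: str = ".") -> Any:
    """Hypothetical helper: {"contact.email": x} -> {"contact": {"email": x}}."""
    nested: dict[str, Any] = {}
    for dotted_key, value in flat.items():
        *parents, leaf = dotted_key.split(sep)
        node = nested
        for part in parents:
            child = node.setdefault(part, {})
            if not isinstance(child, dict):
                # e.g. "a" was already set to a scalar and now "a.b" arrives
                raise ValueError(f"conflicting keys at {part!r} in {dotted_key!r}")
            node = child
        if isinstance(node.get(leaf), dict):
            # e.g. "a.b" already created an object and now a scalar "a" arrives
            raise ValueError(f"conflicting keys at {dotted_key!r}")
        node[leaf] = value
    return _lists_from_indices(nested)


flat_record = {
    "account.number": "A-1001",
    "contact.email": "jane@example.com",
    "servicepoint.0.city": "Berlin",
    "servicepoint.1.city": "Hamburg",
}
print(unflatten(flat_record))
# {'account': {'number': 'A-1001'}, 'contact': {'email': 'jane@example.com'}, 'servicepoint': [{'city': 'Berlin'}, {'city': 'Hamburg'}]}
```

Raising on conflicting keys (both `a` and `a.b`) is a deliberate choice. Silently overwriting one with the other would lose data before an agent ever sees it.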

`plan/dat/data-model/incoming/data-model.schema.json`

```json
{
  "$schema": "https://json-schema.org/draft/2020-12/schema",
  "$id": "https://example.com/schemas/data-model/v1.0.0",
  "title": "Data Model Record",
  "description": "Schema for a single data model record representing customer/account data with contacts, addresses, payment information, and service points.",
  "type": "object",
  "properties": {
    "record": {
      "type": "object",
      "properties": {
        "sourcesystem": {
          "type": "string",
          "default": "RPA-Process",
          "x-tier": "defaulted",
          "description": "Upstream system code from which the record originates; used for routing and audit."
        },
        "createdat": {
          "type": "string",
          "format": "date-time",
          "x-tier": "generated",
          "description": "ISO 8601 timestamp when the record was created in the system of record."
        },
        "updatedat": {
          "type": "string",
          "format": "date-time",
          "x-tier": "generated",
          "description": "ISO 8601 timestamp of the last material update; supports incremental sync."
        },
        "version": {
          "type": "integer",
          "x-tier": "generated",
          "description": "Monotonically increasing revision counter for optimistic concurrency control."
        }
      },
      "additionalProperties": false
    },
    "account": {
      "type": "object",
      "properties": {
        "id": {
          "type": "string",
          "x-tier": "generated",
          "description": "Opaque internal unique identifier of the account."
        },
        "number": {
          "type": "string",
          "x-tier": "trigger",
          "description": "Human-readable account/customer reference used for billing and reconciliation."
        },
        "externalreference": {
          "type": "string",
          "x-tier": "enriched",
          "description": "Optional partner-side mapping reference used for cross-system correlation."
        }
      },
      "additionalProperties": false,
      "required": ["number"]
    },
    "contact": {
      "type": "object",
      "properties": {
        "email": {
          "type": "string",
          "format": "email",
          "x-tier": "trigger",
          "description": "RFC-compliant email address; validated and normalized."
        },
        "fullname": {
          "type": "string",
          "x-tier": "trigger",
          "description": "Display name composed from salutation/title/given/family name."
        },
        "phone": {
          "allOf": [{ "$ref": "#/$defs/phoneNumber" }],
          "x-tier": "trigger",
          "description": "Primary fixed-line phone number; canonical format recommended."
        },
        "callbackwindow": {
          "type": "string",
          "x-tier": "trigger",
          "description": "Free-text or structured callback availability window; stored as opaque payload."
        },
        "preferredchannel": {
          "type": "string",
          "enum": ["PHONE", "EMAIL", "MAIL"],
          "default": "PHONE",
          "x-tier": "defaulted",
          "description": "Preferred communication channel (PHONE, EMAIL, MAIL)."
        },
        "timezone": {
          "allOf": [{ "$ref": "#/$defs/timezone" }],
          "default": "Europe/Berlin",
          "x-tier": "defaulted",
          "description": "IANA time zone identifier for local-time interpretation."
        }
      },
      "additionalProperties": false,
      "required": ["email", "fullname", "phone", "callbackwindow"]
    },
    "organization": {
      "type": "object",
      "properties": {
        "name": {
          "type": "string",
          "x-tier": "trigger",
          "description": "Registered legal name of the organization."
        },
        "legalform": {
          "type": "string",
          "x-tier": "trigger",
          "description": "Legal entity type (e.g., GmbH, AG)."
        },
        "ownername": {
          "type": "string",
          "x-tier": "trigger",
          "description": "Owner/shareholder name(s), including special cases."
        },
        "vatid": {
          "type": "string",
          "x-tier": "enriched",
          "description": "VAT identification number; validated where possible."
        },
        "taxid": {
          "type": "string",
          "x-tier": "enriched",
          "description": "Local tax identification number."
        },
        "registrationnumber": {
          "type": "string",
          "x-tier": "enriched",
          "description": "Official commercial register number."
        },
        "peppolid": {
          "type": "string",
          "x-tier": "enriched",
          "description": "PEPPOL participant identifier for e-invoicing."
        }
      },
      "additionalProperties": false,
      "required": ["name", "legalform", "ownername"]
    },
    "address": {
      "type": "object",
      "properties": {
        "addressline1": {
          "type": "string",
          "x-tier": "trigger",
          "description": "Primary address line including street and building details."
        },
        "addressline2": {
          "type": "string",
          "default": "",
          "x-tier": "defaulted",
          "description": "Optional secondary line (suite, floor, c/o)."
        },
        "housenumber": {
          "type": "string",
          "x-tier": "trigger",
          "description": "Building/house number including suffixes."
        },
        "additional": {
          "type": "string",
          "x-tier": "trigger",
          "description": "Additional address information not captured elsewhere."
        },
        "postalcode": {
          "allOf": [{ "$ref": "#/$defs/postalCode" }],
          "x-tier": "trigger",
          "description": "Postal/ZIP code; stored as string to preserve formatting."
        },
        "city": {
          "type": "string",
          "x-tier": "trigger",
          "description": "City or locality name."
        },
        "region": {
          "type": "string",
          "default": "",
          "x-tier": "defaulted",
          "description": "Administrative region (state/province)."
        },
        "country": {
          "type": "string",
          "x-tier": "trigger",
          "description": "Display country/region label."
        },
        "countrycode": {
          "allOf": [{ "$ref": "#/$defs/countryCode" }],
          "x-tier": "enriched",
          "description": "ISO 3166-1 alpha-2 country code."
        }
      },
      "additionalProperties": false,
      "required": ["addressline1", "housenumber", "additional", "postalcode", "city", "country"]
    },
    "payment": {
      "type": "object",
      "properties": {
        "iban": {
          "allOf": [{ "$ref": "#/$defs/iban" }],
          "x-tier": "trigger",
          "description": "International Bank Account Number; validated via checksum rules."
        },
        "bic": {
          "allOf": [{ "$ref": "#/$defs/bic" }],
          "x-tier": "trigger",
          "description": "Bank Identifier Code (SWIFT)."
        },
        "bookingtext": {
          "type": "string",
          "x-tier": "trigger",
          "description": "Booking/reference text included with payment transactions."
        },
        "schedule": {
          "type": "string",
          "enum": ["TEN_DAYS", "MONTHLY", "QUARTERLY", "SEMI_ANNUALLY", "ANNUALLY"],
          "x-tier": "trigger",
          "description": "Billing frequency (MONTHLY, QUARTERLY, etc.)."
        },
        "currency": {
          "allOf": [{ "$ref": "#/$defs/currencyCode" }],
          "default": "EUR",
          "x-tier": "defaulted",
          "description": "ISO 4217 three-letter currency code."
        },
        "method": {
          "type": "string",
          "enum": ["SEPA_DIRECT_DEBIT", "BANK_TRANSFER", "CREDIT_CARD", "INVOICE"],
          "default": "SEPA_DIRECT_DEBIT",
          "x-tier": "defaulted",
          "description": "Payment method (SEPA_DIRECT_DEBIT, BANK_TRANSFER, etc.)."
        },
        "mandatereference": {
          "type": "string",
          "x-tier": "trigger",
          "description": "SEPA mandate reference; unique per creditor."
        },
        "mandatesignaturedate": {
          "type": "string",
          "format": "date",
          "x-tier": "defaulted",
          "description": "ISO 8601 date when the SEPA mandate was signed."
        }
      },
      "additionalProperties": false,
      "required": ["iban", "bic", "bookingtext", "schedule", "mandatereference"]
    },
    "payer": {
      "type": "object",
      "properties": {
        "id": {
          "type": "string",
          "x-tier": "generated",
          "description": "Internal identifier of the payer entity."
        },
        "type": {
          "type": "string",
          "enum": ["PERSON", "ORGANIZATION"],
          "x-tier": "trigger",
          "description": "Payer classification (PERSON, ORGANIZATION)."
        },
        "firstname": {
          "type": "string",
          "x-tier": "trigger",
          "description": "Payer given name."
        },
        "lastname": {
          "type": "string",
          "x-tier": "trigger",
          "description": "Payer family name."
        },
        "dateofbirth": {
          "type": "string",
          "format": "date",
          "x-tier": "trigger",
          "description": "Payer date of birth in ISO 8601 format."
        }
      },
      "additionalProperties": false,
      "required": ["type", "firstname", "lastname", "dateofbirth"]
    },
    "servicepoint": {
      "type": "array",
      "items": {
        "type": "object",
        "properties": {
          "id": {
            "type": "string",
            "x-tier": "generated",
            "description": "Internal identifier of a service point."
          },
          "externalreference": {
            "type": "string",
            "x-tier": "enriched",
            "description": "External mapping reference for the service point."
          },
          "enabled": {
            "type": "boolean",
            "x-tier": "trigger",
            "description": "Flag indicating if the service point is active."
          },
          "type": {
            "type": "string",
            "enum": ["POSTBOX", "ADDRESS", "PICKUP_POINT", "LOCKER"],
            "x-tier": "trigger",
            "description": "Type/category of service point (e.g., postbox, address)."
          },
          "identifier": {
            "type": "string",
            "x-tier": "trigger",
            "description": "Identifier/number of the service point."
          },
          "postalcode": {
            "allOf": [{ "$ref": "#/$defs/postalCode" }],
            "x-tier": "trigger",
            "description": "Postal code associated with the service point."
          },
          "city": {
            "type": "string",
            "x-tier": "trigger",
            "description": "City/town for the service point."
          }
        },
        "required": ["enabled", "type", "identifier", "postalcode", "city"],
        "additionalProperties": false
      },
      "description": "Array of service points associated with the account.",
      "minItems": 1,
      "x-tier": "trigger"
    }
  },
  "additionalProperties": false,
  "required": ["account", "address", "contact", "organization", "payer", "payment", "servicepoint"],
  "$defs": {
    "iban": {
      "type": "string",
      "pattern": "^[A-Z]{2}[0-9]{2}[A-Z0-9]{4,30}$",
      "description": "International Bank Account Number (IBAN)"
    },
    "bic": {
      "type": "string",
      "pattern": "^[A-Z]{4}[A-Z]{2}[A-Z0-9]{2}([A-Z0-9]{3})?$",
      "description": "Bank Identifier Code (BIC/SWIFT)"
    },
    "countryCode": {
      "type": "string",
      "pattern": "^[A-Z]{2}$",
      "description": "ISO 3166-1 alpha-2 country code"
    },
    "currencyCode": {
      "type": "string",
      "pattern": "^[A-Z]{3}$",
      "description": "ISO 4217 three-letter currency code"
    },
    "phoneNumber": {
      "type": "string",
      "pattern": "^\\+?[0-9\\s\\-\\(\\)]{6,20}$",
      "description": "Phone number in international or local format"
    },
    "postalCode": {
      "type": "string",
      "pattern": "^[A-Z0-9\\-\\s]{3,10}$",
      "description": "Postal/ZIP code"
    },
    "timezone": {
      "type": "string",
      "pattern": "^[A-Za-z]+(?:_[A-Za-z]+)?/[A-Za-z0-9_+-]+$",
      "description": "IANA timezone identifier (e.g., Europe/Berlin)"
    }
  },
  "allOf": [
    {
      "if": {
        "properties": {
          "payment": {
            "properties": {
              "method": { "const": "SEPA_DIRECT_DEBIT" }
            },
            "required": ["method"]
          }
        }
      },
      "then": {
        "properties": {
          "payment": {
            "required": ["mandatereference", "mandatesignaturedate"]
          }
        }
      }
    }
  ]
}
```
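The `x-tier` annotations and the `default` values only help an agent if something actually reads them. Unknown keywords such as `x-tier` do not affect JSON Schema validation, and `default` is an annotation only, so a validator will not insert default values. Below is a minimal, standard-library-only Python sketch that walks the schema, groups the dotted field paths by tier, and fills in missing defaults. The function names and the `[]` path notation for array items are hypothetical. The sketch handles only the keywords this schema uses for nesting (`properties`, `items`) and does not resolve `$ref`.

```python
import copy
from typing import Any


def fields_by_tier(schema: dict[str, Any]) -> dict[str, list[str]]:
    """Group dotted property paths by their custom `x-tier` annotation."""
    tiers: dict[str, list[str]] = {}

    def walk(node: dict[str, Any], prefix: str) -> None:
        for name, sub in node.get("properties", {}).items():
            path = prefix + name
            if "x-tier" in sub:
                tiers.setdefault(sub["x-tier"], []).append(path)
            if "properties" in sub:  # nested object, e.g. "contact"
                walk(sub, path + ".")
            elif isinstance(sub.get("items"), dict):  # array of objects, e.g. "servicepoint"
                walk(sub["items"], path + "[].")

    walk(schema, "")
    return tiers


def apply_defaults(schema: dict[str, Any], instance: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of `instance` with missing properties set to their schema `default`.

    Only objects already present in the instance are filled; missing parent
    objects are not created.
    """
    result = copy.deepcopy(instance)

    def fill(node_schema: dict[str, Any], node: dict[str, Any]) -> None:
        for name, sub in node_schema.get("properties", {}).items():
            if name not in node:
                if "default" in sub:
                    node[name] = copy.deepcopy(sub["default"])
                continue
            value = node[name]
            if "properties" in sub and isinstance(value, dict):
                fill(sub, value)
            elif isinstance(sub.get("items"), dict) and isinstance(value, list):
                for item in value:
                    if isinstance(item, dict):
                        fill(sub["items"], item)

    fill(schema, result)
    return result


# A trimmed excerpt of the schema above, inlined so the sketch runs on its own.
SCHEMA_EXCERPT = {
    "type": "object",
    "properties": {
        "contact": {
            "type": "object",
            "properties": {
                "email": {"type": "string", "format": "email", "x-tier": "trigger"},
                "preferredchannel": {"type": "string", "default": "PHONE", "x-tier": "defaulted"},
            },
        },
        "payment": {
            "type": "object",
            "properties": {
                "method": {"type": "string", "default": "SEPA_DIRECT_DEBIT", "x-tier": "defaulted"},
                "mandatesignaturedate": {"type": "string", "format": "date", "x-tier": "defaulted"},
            },
        },
        "servicepoint": {
            "type": "array",
            "x-tier": "trigger",
            "items": {
                "type": "object",
                "properties": {
                    "id": {"type": "string", "x-tier": "generated"},
                    "city": {"type": "string", "x-tier": "trigger"},
                },
            },
        },
    },
}

print(fields_by_tier(SCHEMA_EXCERPT))
# payment.method gets its default; mandatesignaturedate has no default and stays absent.
print(apply_defaults(SCHEMA_EXCERPT, {"contact": {"email": "jane@example.com"}, "payment": {}}))
```

To run this against the real file, load `plan/dat/data-model/incoming/data-model.schema.json` with `json.load` and pass the resulting dict in. Filling defaults *before* validation also changes how the conditional at the end of the schema behaves. A `payment` without an explicit `method` does not match the `if` branch, because that branch requires `method` to be present. Once the `SEPA_DIRECT_DEBIT` default has been filled in, `mandatesignaturedate` becomes required. That field is tagged `defaulted` but carries no `default`, so the helper leaves it absent.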

The script is kept in source control, so the development setup can easily be re-created on different developer machines.

This is all still an initial “plan” solution design, and any data structure is subject to change. Still, I never hesitate to put it into code early.