Essentially, we have a storage bucket with 100,000+ files (don't ask). We want to set up an automation to delete files older than 3 months. I don't see a simple way to go about this (I haven't looked at the API yet). Is there a simple way to do this?

Do you have any date identifier in the file name?

If not, I don't see how to achieve this, as the List Storage Files activity doesn't output dates.

How are your files stored in the storage bucket? What naming convention are you guys using? Do you append a datetime to the file name when uploading?

Here’s what we do:

- We save exception screenshots in a storage bucket, and as you know, those can pile up a lot over time. So we include a datetime in the screenshot file name, which lets us find the files older than the last 3 months and delete them.

Surprisingly, as of now, listing files from a storage bucket returns only the following info:

`StorageFileInfo { FileFullPath="CS_20240722.033503.png", StorageContainerFolderPath=null, StorageContainerName="SB_Exceptions_Screenshots" }`
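The file name in that output appears to follow a `<prefix>_YYYYMMDD.HHMMSS.<ext>` pattern. Here's a minimal Python sketch of building such a name at upload time, assuming that pattern (it's my reading of the example, not a documented UiPath convention); `timestamped_name` is a hypothetical helper. In a workflow you'd do the equivalent in an Assign before the upload.

```python
from datetime import datetime


def timestamped_name(prefix: str, when: datetime, ext: str = "png") -> str:
    """Hypothetical helper: build '<prefix>_YYYYMMDD.HHMMSS.<ext>' from a datetime."""
    # datetime accepts strftime codes directly inside an f-string format spec
    return f"{prefix}_{when:%Y%m%d.%H%M%S}.{ext}"


# Reproduces the name from the listing above
print(timestamped_name("CS", datetime(2024, 7, 22, 3, 35, 3)))  # CS_20240722.033503.png
```

Because the stamp is zero-padded and goes from largest unit to smallest, names with the same prefix also sort in time order as plain strings.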

I remember feedback was also submitted to UiPath asking for more file details in storage buckets. As of now, if the file name has no datetime, we can't tell when a file was uploaded, even by looking at the storage bucket.

So, with the functionality exposed right now, I would do the following:

- Use List Storage Files and filter the files based on the datetime in their names.

- Then loop through them and, inside the loop, use the Delete Storage File activity to delete them one by one.
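The two steps above can be sketched in Python as follows. This is a minimal sketch, assuming names follow the `<prefix>_YYYYMMDD.HHMMSS.<ext>` pattern from the listing example and approximating "3 months" as 90 days. `delete_file` is a hypothetical callback standing in for Delete Storage File; the date comparison is done in code after listing, since a name filter on its own may not be able to express "older than".

```python
import re
from datetime import datetime, timedelta
from typing import Callable, Iterable, List

# Assumed pattern: '<prefix>_YYYYMMDD.HHMMSS.<ext>', e.g. CS_20240722.033503.png
STAMP = re.compile(r"_(\d{8}\.\d{6})\.[^./]+$")


def files_older_than(names: Iterable[str], cutoff: datetime) -> List[str]:
    """Return the names whose embedded timestamp is strictly before cutoff."""
    old = []
    for name in names:
        match = STAMP.search(name)
        if match is None:
            continue  # no recognizable datetime in the name: leave the file alone
        try:
            stamp = datetime.strptime(match.group(1), "%Y%m%d.%H%M%S")
        except ValueError:
            continue  # digits present but not a real date/time: skip
        if stamp < cutoff:
            old.append(name)
    return old


def delete_old_files(
    names: Iterable[str],
    now: datetime,
    delete_file: Callable[[str], None],
    days: int = 90,  # "3 months" approximated as 90 days
) -> List[str]:
    """Filter by the timestamp in each name, then delete the matches one by one.

    delete_file is a hypothetical callback standing in for the
    Delete Storage File activity inside the loop.
    """
    old = files_older_than(names, now - timedelta(days=days))
    for name in old:
        delete_file(name)
    return old


# Example: only the July file is more than 90 days before 2024-11-20
listed = ["CS_20240722.033503.png", "CS_20241105.120000.png", "notes.txt"]
print(delete_old_files(listed, datetime(2024, 11, 20), delete_file=lambda n: None))
```

Files without a recognizable stamp are deliberately left untouched, so a naming mistake can't cause an unintended deletion.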

As of now, I don't think there is any way to bulk delete those files via Studio.

Hope this helps.

Regards

Sonali

These are created via Document Understanding activities, so we are using the storage bucket to store those types of files.

They look like :

No date time is included in them. ![]()

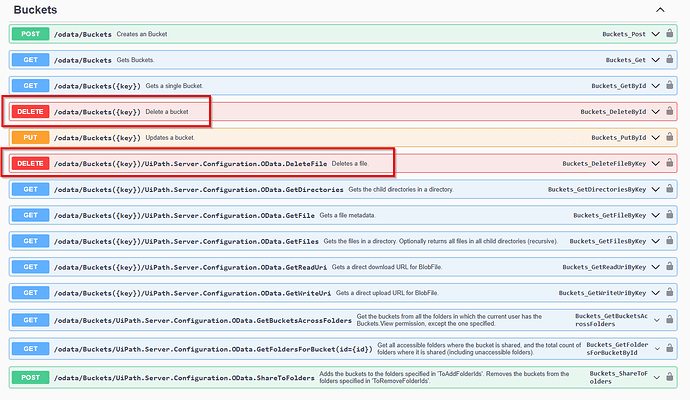

I also checked its API. It's either delete the whole bucket or delete a single file.


oopsie oopsie…

In that case, I'm afraid there is no way to hand-pick certain files for deletion.

It's either all or none.

You could consider something like the following, but that's also not gonna be an easy job:

1. Filter your Document Understanding job runs by the date range you want to clean up.

2. Then, if your Document Understanding processes keep any logs/records of the folder IDs that were pushed to the storage bucket, pick those IDs.

3. Build a list of all the folder IDs created during that time range of job runs.

4. Then use List Storage Files to get the files for only those folder IDs, and delete them.
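The selection in step 4 can be sketched in Python as below. This is a minimal sketch, assuming you've already collected the folder IDs from your process logs and that each listed file path starts with its folder ID (`<folder-id>/<file>`); both that layout and `files_in_folders` are hypothetical. The optional `protected` set is for folder IDs you know are still tied to open actions. Each selected path would then go to Delete Storage File, one by one.

```python
from typing import AbstractSet, Iterable, List


def files_in_folders(
    paths: Iterable[str],
    folder_ids: AbstractSet[str],
    protected: AbstractSet[str] = frozenset(),
) -> List[str]:
    """Select paths whose top-level folder is in folder_ids but not in protected.

    Assumes paths look like '<folder-id>/<file>' (hypothetical layout).
    """
    targets = set(folder_ids) - set(protected)
    selected = []
    for path in paths:
        # normalise separators and drop a leading slash before taking the first segment
        top = path.replace("\\", "/").lstrip("/").split("/", 1)[0]
        if top in targets:
            selected.append(path)
    return selected


# Example: only files under folder-a are selected
paths = ["folder-a/input.pdf", "folder-a/result.json", "folder-b/input.pdf"]
print(files_in_folders(paths, {"folder-a"}))
```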

I'm sorry, I can't think of anything else that would make this task easier.

Not having detailed file info is a challenge with storage buckets as of now; we have also been inconvenienced many times by this missing feature.


Storage buckets literally don't have a date tag in their metadata, so you are kind of screwed here.

If you have active actions that might be related to these documents, then it's pretty hard to tease them out so that you keep those and delete the others.

Yeah, shame there's no time/date tag anywhere.

I created a second storage bucket, redirected items there, and am planning on deleting the entire previous storage bucket, just to get a clean slate lol.

And that was the reason why I included timestamp in the document name.

Cheers

We also include timestamp in every file we upload to storage bucket.

I think it is possible to clean it up without being disruptive. I'd need to look at how well some of the filtering works in the Task API; I could make a solution accelerator for it at some point.

I'm still trying to wrestle back control of my organization's Marketplace account to post things.

The challenge is that Document Understanding artifacts do not contain timestamps: each validation action adds about 5-7 documents, and they use GUIDs to identify themselves. There is zero timestamp data in them, so they are very hard to clean up.